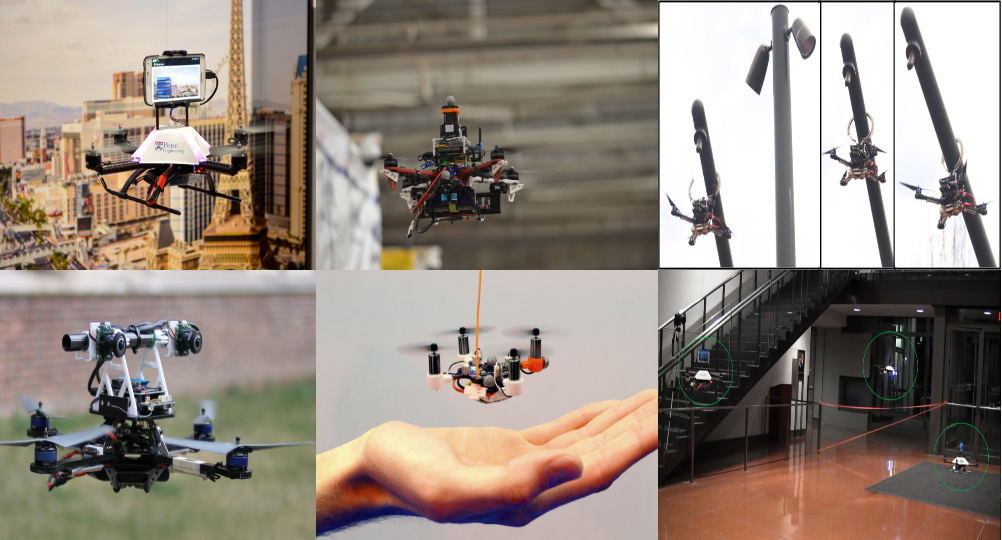

We develop real-time methods for generating dense maps for large-scale autonomous navigation of aerial robots. We investigate monocular and multi-camera dense mapping methods, with special attention to the tight integration between maps and motion planning modules.

Without any prior knowledge of the environment, our dense mapping module uses an inverse depth labeling method to extract a 3D cost volume through temporal aggregation on synchronized camera poses. After semi-global optimization and post-processing, a dense depth image is calculated and fed into our uncertainty-aware truncated signed distance function (TSDF) fusion approach, from which a live dense 3D map is produced.
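To illustrate what inverse depth labeling means in practice, the following minimal sketch samples depth hypotheses uniformly in inverse depth, so that nearby depths are sampled more densely than distant ones. The function name `inverse_depth_labels` and the parameters `d_min`, `d_max`, and `num_labels` are hypothetical and chosen only for illustration; the sampling range and label count used in our system are not stated here.

```python
def inverse_depth_labels(d_min, d_max, num_labels):
    """Return depth hypotheses (near to far) spaced uniformly in inverse depth.

    All names and parameters are illustrative assumptions.
    """
    if not (0.0 < d_min < d_max) or num_labels < 2:
        raise ValueError("require 0 < d_min < d_max and num_labels >= 2")
    inv_near = 1.0 / d_min
    inv_far = 1.0 / d_max
    labels = []
    for i in range(num_labels):
        # Linear interpolation in inverse depth, from nearest to farthest.
        inv = inv_near + (inv_far - inv_near) * i / (num_labels - 1)
        labels.append(1.0 / inv)
    return labels


if __name__ == "__main__":
    for depth in inverse_depth_labels(0.5, 10.0, 5):
        print(f"{depth:.3f} m")
```

Each label corresponds to one slice of the cost volume; uniform spacing in inverse depth gives roughly uniform spacing in image disparity, which is why the gaps between consecutive depth hypotheses grow with distance.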

Autonomous aerial navigation using monocular visual-inertial fusion

By Yi LIN

We present a real-time monocular visual-inertial dense mapping and autonomous navigation system. The whole system is implemented on a compact, lightweight quadrotor, where all modules run onboard and in real time. By properly coordinating three major system modules (state estimation, dense mapping, and trajectory planning), we validate our system in both cluttered indoor and outdoor environments via multiple autonomous flight experiments. A tightly-coupled monocular visual-inertial state estimator is developed to provide high-accuracy odometry, which is used for both feedback control and dense mapping. Our estimator supports on-the-fly initialization and estimates vehicle velocity, metric scale, and IMU biases online.

Without any prior knowledge of the environment, our dense mapping module uses a plane-sweeping-based method to extract a 3D cost volume through temporal aggregation on synchronized camera poses. After semi-global optimization and post-processing, a dense depth image is calculated and fed into our uncertainty-aware TSDF fusion approach, from which a live dense 3D map is produced. Using this map, our planning module first generates an initial collision-free trajectory with our sampling-based path searching method. A gradient-based optimization method is then applied to ensure trajectory smoothness and dynamic feasibility. Following the trend of rapid increases in mobile computing power, we believe our minimal sensor setup suggests a feasible solution for fully autonomous miniaturized aerial robots.
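The fusion step can be pictured as a per-voxel weighted running average. The sketch below is a generic TSDF update, not our exact implementation: it assumes each depth observation carries a variance and weights it by the inverse of that variance, which is one common way to make the fusion uncertainty-aware. The function `fuse_voxel`, the truncation distance, and the weight cap are all hypothetical values chosen for illustration.

```python
def fuse_voxel(tsdf, weight, sdf_obs, obs_variance,
               truncation=0.3, max_weight=50.0):
    """Fuse one signed-distance observation into a voxel.

    tsdf, weight  : current voxel state (meters, unitless weight)
    sdf_obs       : observed signed distance to the surface (meters),
                    positive in front of the surface
    obs_variance  : assumed variance of the observation (must be > 0)
    Returns the updated (tsdf, weight) pair.
    """
    if obs_variance <= 0.0:
        raise ValueError("obs_variance must be positive")
    if sdf_obs < -truncation:
        # Voxel lies well behind the observed surface: treat as occluded.
        return tsdf, weight
    d = min(sdf_obs, truncation)      # truncate in front of the surface
    w_obs = 1.0 / obs_variance        # assumption: inverse-variance weight
    new_weight = weight + w_obs
    new_tsdf = (tsdf * weight + d * w_obs) / new_weight
    # Capping the weight keeps the map responsive to later observations.
    return new_tsdf, min(new_weight, max_weight)


if __name__ == "__main__":
    state = (0.0, 0.0)
    for sdf, var in [(0.10, 0.01), (0.20, 1.0), (0.12, 0.02)]:
        state = fuse_voxel(state[0], state[1], sdf, var)
        print(state)
```

With this weighting, a confident observation (small variance) pulls the stored distance strongly, while a noisy one barely moves it.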

High-precision online markerless stereo extrinsic calibration

By Yonggen LING

Stereo cameras and dense stereo matching algorithms are core components of many robotic applications because they directly provide dense depth measurements and are robust to changes in lighting conditions. However, the performance of dense depth estimation relies heavily on accurate stereo extrinsic calibration. In this work, we present a real-time markerless approach for obtaining high-precision stereo extrinsic calibration using a novel 5-DOF (degrees-of-freedom) nonlinear optimization on a manifold, which captures the observability property of vision-only stereo calibration. Our method minimizes epipolar errors between sparse natural features matched across the two cameras in each frame. It does not require temporal feature correspondences, making it not only invariant to dynamic scenes and illumination changes, but also significantly faster than standard bundle adjustment-based approaches. We introduce a principled method to determine whether the calibration has converged to the required level of accuracy, and show through online experiments that our approach achieves accuracy comparable to offline marker-based calibration methods. Our method refines the stereo extrinsics to an accuracy sufficient for block matching-based dense disparity computation. It provides a cost-effective way to improve the reliability of stereo vision systems for long-term autonomy.
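The reason only five degrees of freedom are observable is that, from images alone, the baseline length cannot be recovered: only the rotation (3 DOF) and the translation direction (2 DOF) affect the epipolar constraint. The sketch below evaluates the algebraic epipolar residual for one left-right feature pair under such a parameterization. It is an illustration under stated assumptions, not our solver: the rotation-vector and azimuth/elevation parameterization, the convention X_right = R X_left + b t, and all function names are hypothetical choices.

```python
import math


def rodrigues(rx, ry, rz):
    """Rotation matrix from a rotation vector (axis times angle)."""
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def unit_translation(azimuth, elevation):
    """Unit translation direction from two angles (2 DOF, scale-free)."""
    ce = math.cos(elevation)
    return [ce * math.cos(azimuth), ce * math.sin(azimuth), math.sin(elevation)]


def matvec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def epipolar_residual(params, x_left, x_right):
    """Algebraic epipolar error x_right^T [t]_x R x_left.

    params  : (rx, ry, rz, azimuth, elevation), the 5 assumed DOF
    x_left  : feature in normalized left-image coordinates [x, y, 1]
    x_right : matching feature in normalized right-image coordinates
    """
    rx, ry, rz, azimuth, elevation = params
    R = rodrigues(rx, ry, rz)
    t = unit_translation(azimuth, elevation)
    return dot(x_right, cross(t, matvec(R, x_left)))
```

A calibration routine would sum the squares of such residuals over all feature pairs in a frame and minimize over the five parameters; the residual is zero for a noise-free match under the true extrinsics, regardless of the baseline length.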

Real-time monocular dense mapping on aerial robots using visual-inertial fusion

By Zhenfei YANG

In this work, we present a solution to real-time monocular dense mapping. A tightly-coupled visual-inertial localization module is designed to provide metric, high-accuracy odometry. A motion stereo algorithm is proposed that takes the video input from one camera and produces local depth measurements with semi-global regularization. The local measurements are then integrated into a global map for noise filtering and map refinement. Our indoor and outdoor experiments verify that the resulting global map supports navigation and obstacle avoidance for aerial robots. Our system runs at 10 Hz on an Nvidia Jetson TX1 by properly distributing computation between the CPU and GPU. Through onboard experiments, we demonstrate its ability to close the perception-action loop for autonomous aerial robots. We release our implementation as open-source software.

Building maps for autonomous navigation using sparse visual SLAM features

By Yonggen LING

Autonomous navigation, which consists of a systematic integration of localization, mapping, motion planning, and control, is the core capability of mobile robotic systems. However, most research considers only isolated technical modules. Significant gaps exist between the maps generated by SLAM algorithms and the maps required for motion planning. Our work presents a complete online system that consists of three modules: incremental SLAM, real-time dense mapping, and free space extraction. The obtained free-space volume (i.e., a tessellation of tetrahedra) can serve as regular geometric constraints for motion planning. Our system runs in real time thanks to engineering decisions made to increase system efficiency. We conduct extensive experiments on the KITTI dataset to demonstrate the run-time performance. Qualitative and quantitative results on mapping accuracy are also shown. For the benefit of the community, we make the source code public.
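Representing free space as tetrahedra is convenient for planning because each tetrahedron is convex, so membership reduces to four sign checks. The following sketch tests whether a query point lies in a free-space volume given as a list of tetrahedra. It is a generic geometric routine under assumed data structures (vertices as 3-tuples, a tetrahedron as a 4-tuple of vertices), with hypothetical function names; it does not reproduce how our system builds or queries the tessellation.

```python
def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def signed_volume(a, b, c, d):
    """Six times the signed volume of tetrahedron (a, b, c, d)."""
    return _dot(_sub(b, a), _cross(_sub(c, a), _sub(d, a)))


def point_in_tetrahedron(p, tet, eps=1e-12):
    """True if p lies inside or on the boundary of the tetrahedron."""
    a, b, c, d = tet
    v = signed_volume(a, b, c, d)
    if abs(v) < eps:
        return False  # degenerate (flat) tetrahedron
    # Replace each vertex by p in turn; every sub-volume must have the
    # same sign as the full volume for p to be inside.
    parts = (
        signed_volume(p, b, c, d),
        signed_volume(a, p, c, d),
        signed_volume(a, b, p, d),
        signed_volume(a, b, c, p),
    )
    return all(part * v >= -eps for part in parts)


def in_free_space(p, tetrahedra):
    """True if p lies in at least one free-space tetrahedron."""
    return any(point_in_tetrahedron(p, tet) for tet in tetrahedra)


if __name__ == "__main__":
    free = [((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1))]
    print(in_free_space((0.1, 0.1, 0.1), free))
    print(in_free_space((1.0, 1.0, 1.0), free))
```

A linear scan over all tetrahedra is used here only for brevity; a practical planner would index the tessellation spatially or walk between adjacent tetrahedra.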