HKUST Aerial Robotics Group

Welcome to the HKUST Aerial Robotics Group led by Prof. Shaojie Shen. Our group is part of the HKUST Cheng Kar-Shun Robotics Institute (CKSRI).

We develop fundamental technologies that enable aerial robots (e.g., UAVs and drones) and ground robots (e.g., UGVs and self-driving cars) to operate autonomously in complex environments. Our research spans the full stack of aerial and ground robotic systems, with a focus on state estimation, mapping, trajectory planning, multi-robot coordination, and testbed development using low-cost sensing and computation components.

Research Highlights

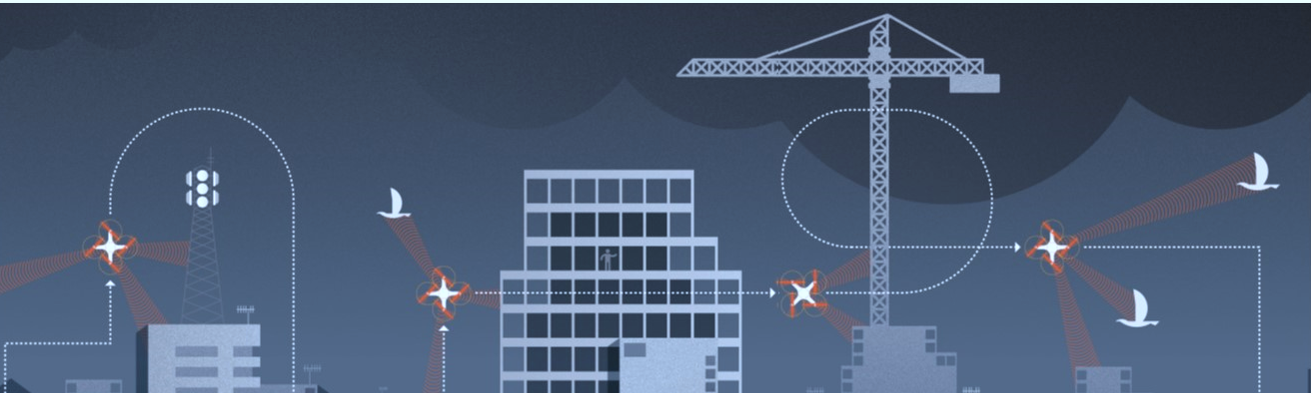

RACER: rapid collaborative exploration with a decentralized multi-UAV system

Our paper "RACER: Rapid Collaborative Exploration with a Decentralized Multi-UAV System," authored by Boyu Zhou, Hao Xu, and Prof. Shen, received the 2023 IEEE Transactions on Robotics King-Sun Fu Memorial Best Paper Award.

We present an efficient framework for fast autonomous exploration of complex unknown environments with quadrotors. Our approach achieves a significantly higher exploration rate than recent methods, owing to careful planning of viewpoints, tours, and trajectories.

We further propose a fully decentralized approach for exploration using a fleet of quadrotors. The team operates with asynchronous and limited communication and does not require any central control. The team's coverage paths and workload allocation are optimized and balanced to fully realize the system's potential. Check out our recent paper, videos, and code for more details.
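As a rough illustration of what balancing workload means, the sketch below assumes the unexplored space has already been split into regions with estimated exploration costs, and hands each region to the currently least-loaded quadrotor (a greedy longest-processing-time heuristic). This is a simplified stand-in, not RACER's allocation method: the names `allocate_regions` and `region_costs` are hypothetical, and travel distance, communication, and coverage-path ordering are ignored.

```python
import heapq


def allocate_regions(region_costs, num_drones):
    """Greedy longest-processing-time (LPT) allocation (illustrative only).

    region_costs: dict mapping a hypothetical region id to its estimated
    exploration cost. Regions are visited from most to least expensive, and
    each one goes to the drone with the smallest accumulated workload so far.
    """
    # Min-heap of (accumulated workload, drone id); ties go to the lower id.
    heap = [(0.0, d) for d in range(num_drones)]
    heapq.heapify(heap)
    assignment = {d: [] for d in range(num_drones)}
    # Descending cost; the region id breaks ties so the result is deterministic.
    order = sorted(region_costs, key=lambda r: (-region_costs[r], r))
    for region in order:
        load, drone = heapq.heappop(heap)
        assignment[drone].append(region)
        heapq.heappush(heap, (load + region_costs[region], drone))
    loads = {d: sum(region_costs[r] for r in regs) for d, regs in assignment.items()}
    return assignment, loads


if __name__ == "__main__":
    costs = {"A": 7.0, "B": 5.0, "C": 4.0, "D": 3.0, "E": 3.0}
    print(allocate_regions(costs, num_drones=2))
```

With these example costs and two drones, the greedy pass yields loads of 10 and 12, although a perfect 11/11 split exists (A+C and B+D+E). Gaps like this are one reason to optimize allocation jointly rather than rely on a greedy rule.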

$D^2$SLAM: decentralized and distributed collaborative visual-inertial SLAM system for aerial swarm

A crucial technology for fully autonomous aerial swarms is collaborative SLAM (CSLAM), which enables the estimation of relative poses and globally consistent trajectories of aerial robots.

However, existing CSLAM systems do not prioritize relative localization accuracy, which is critical for close collaboration among UAVs.

This paper presents $D^2$SLAM, a novel decentralized and distributed ($D^2$) CSLAM system that covers two scenarios: near-field estimation for high-accuracy state estimation at close range, and far-field estimation for consistent global trajectory estimation.

We argue that $D^2$SLAM can be applied in a wide range of real-world applications.

Our preprint is available at https://arxiv.org/abs/2211.01538

Authors: Hao Xu, Peize Liu, Xinyi Chen and Shaojie Shen

Video: https://youtu.be/xbNAJP8EFOU

Code: https://github.com/HKUST-Aerial-Robotics/D2SLAM

GVINS: tightly coupled GNSS–visual–inertial fusion for smooth and consistent state estimation

We present a nonlinear optimization-based system, GVINS, that tightly fuses global navigation satellite system (GNSS) raw measurements with visual and inertial information for real-time and drift-free state estimation. Thanks to the tightly coupled multi-sensor approach and system design, our system fully exploits the merits of three types of sensors and copes seamlessly with the transition between indoor and outdoor environments, where satellites are lost and reacquired. Extensive experiments demonstrate that our system substantially suppresses the drift of visual-inertial odometry (VIO) and preserves local accuracy in spite of noisy GNSS measurements.
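GNSS raw measurements typically enter an estimator as pseudoranges. The sketch below is a minimal single-point-positioning example, not GVINS itself: it solves for a receiver position and clock bias by Gauss-Newton on the simplified model rho = ||s - p|| + b, where s is a satellite position, p the receiver position, and b the receiver clock bias expressed in meters (satellite clock, atmospheric delays, and Earth rotation are ignored). A tightly coupled system instead adds residuals of this kind as factors next to visual and inertial terms in one nonlinear optimization; the function names here are hypothetical.

```python
import math


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def single_point_position(sats, pseudoranges, iters=10):
    """Gauss-Newton estimate of [px, py, pz, b] in meters.

    Simplified model (an assumption): rho_i = ||s_i - p|| + b.
    """
    x = [0.0, 0.0, 0.0, 0.0]  # start at the Earth's center with zero bias
    for _ in range(iters):
        JtJ = [[0.0] * 4 for _ in range(4)]
        Jtr = [0.0] * 4
        for s, rho in zip(sats, pseudoranges):
            d = [x[k] - s[k] for k in range(3)]
            dist = math.sqrt(sum(v * v for v in d))
            # Jacobian of the predicted pseudorange w.r.t. [p, b].
            J = [d[0] / dist, d[1] / dist, d[2] / dist, 1.0]
            r = rho - (dist + x[3])  # measured minus predicted
            for i in range(4):
                Jtr[i] += J[i] * r
                for j in range(4):
                    JtJ[i][j] += J[i] * J[j]
        dx = solve_linear(JtJ, Jtr)  # normal equations: (J^T J) dx = J^T r
        x = [x[k] + dx[k] for k in range(4)]
    return x
```

At least four satellites are needed for the four unknowns; with noisy measurements, extra satellites turn this into a least-squares fit.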

Authors: Shaozu Cao, Xiuyuan Lu and Shaojie Shen

Video: https://www.youtube.com/watch?v=TebAal1ARnk

Code: https://github.com/HKUST-Aerial-Robotics/GVINS

FUEL: Fast UAV exploration using incremental frontier structure and hierarchical planning

FUEL is a powerful framework for Fast UAV ExpLoration. Our method is demonstrated to complete challenging exploration tasks 3-8 times faster than state-of-the-art approaches at the time of publication. Central to it is a Frontier Information Structure (FIS), which incrementally maintains crucial information for exploration planning along with the online built map. Based on the FIS, a hierarchical planner plans frontier coverage paths, refines local viewpoints, and generates minimum-time trajectories in sequence to explore unknown environments agilely and safely.
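A frontier is commonly defined as a known-free cell bordering unknown space. The sketch below detects frontier cells on a 2D occupancy grid and groups them into 8-connected clusters; the optional `box` argument restricts detection to a recently updated region, loosely mimicking how an incremental structure avoids rescanning the whole map. It is an illustrative assumption, not FUEL's FIS, which maintains richer per-cluster information for planning in 3D; the cell encoding and function names are hypothetical.

```python
from collections import deque

UNKNOWN, FREE, OCCUPIED = -1, 0, 1  # hypothetical cell encoding


def is_frontier(grid, r, c):
    """A frontier cell is a free cell with at least one unknown 4-neighbor."""
    if grid[r][c] != FREE:
        return False
    rows, cols = len(grid), len(grid[0])
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
            return True
    return False


def frontier_clusters(grid, box=None):
    """Group frontier cells into 8-connected clusters.

    box = (r0, r1, c0, c1) uses half-open ranges and limits the search to a
    recently updated part of the map.
    """
    rows, cols = len(grid), len(grid[0])
    r0, r1, c0, c1 = box if box else (0, rows, 0, cols)
    cells = {(r, c) for r in range(r0, r1) for c in range(c0, c1)
             if is_frontier(grid, r, c)}
    clusters, seen = [], set()
    for start in sorted(cells):
        if start in seen:
            continue
        seen.add(start)
        queue, cluster = deque([start]), []
        while queue:  # breadth-first flood fill over frontier cells
            r, c = queue.popleft()
            cluster.append((r, c))
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    nb = (r + dr, c + dc)
                    if nb in cells and nb not in seen:
                        seen.add(nb)
                        queue.append(nb)
        clusters.append(sorted(cluster))
    return clusters
```

Each returned cluster is a natural unit for later stages, such as choosing a viewpoint per cluster and ordering clusters into a coverage tour.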

Authors: Boyu Zhou, Yichen Zhang, Xinyi Chen and Shaojie Shen

Code: https://github.com/HKUST-Aerial-Robotics/FUEL

EMSGC: event-based motion segmentation with spatio-temporal graph cuts

We develop a method to identify independently moving objects acquired with an event-based camera, i.e., to solve the event-based motion segmentation problem. We cast the problem as an energy minimization involving the fitting of multiple motion models. We jointly solve two subproblems, namely event-cluster assignment (labeling) and motion model fitting, in an iterative manner by exploiting the structure of the input event data in the form of a spatio-temporal graph.
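The alternation between labeling and model fitting can be illustrated with a toy version. In the sketch below, events are reduced to (t, x) pairs, each motion model is a 1D constant-velocity track x = a + v*t, and each iteration assigns every event to its best-fitting model, then refits every model by least squares. Only a data term is kept: the spatio-temporal graph and the graph-cut optimization that EMSGC uses to encourage spatially coherent labels are deliberately left out, and all names are hypothetical.

```python
def fit_line(points):
    """Least-squares fit of x = a + v * t to (t, x) pairs; returns (a, v)."""
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_x = sum(x for _, x in points) / n
    s_tt = sum((t - mean_t) ** 2 for t, _ in points)
    s_tx = sum((t - mean_t) * (x - mean_x) for t, x in points)
    v = s_tx / s_tt if s_tt > 0 else 0.0
    return (mean_x - v * mean_t, v)


def residual(model, event):
    """Squared distance between an event's x and the model's prediction at t."""
    a, v = model
    t, x = event
    return (x - (a + v * t)) ** 2


def segment_events(events, init_models, max_iters=20):
    """Alternate labeling and model fitting until the labels stop changing."""
    models = list(init_models)
    labels = None
    for _ in range(max_iters):
        # (1) Labeling: each event picks the model with the smallest residual.
        new_labels = [min(range(len(models)), key=lambda k: residual(models[k], e))
                      for e in events]
        if new_labels == labels:
            break
        labels = new_labels
        # (2) Fitting: refit every model to the events currently assigned to it.
        for k in range(len(models)):
            members = [e for e, lab in zip(events, labels) if lab == k]
            if len(members) >= 2:
                models[k] = fit_line(members)
    return labels, models
```

Like k-means, this alternation only reaches a local optimum and depends on the initial models; a smoothness term over a graph is one way to make labels more robust near where objects overlap.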

Project: https://sites.google.com/view/emsgc

Video: https://www.youtube.com/watch?v=ztUyNlKUwcM

Code: https://github.com/HKUST-Aerial-Robotics/EMSGC

Event-based visual odometry: A short tutorial

Dr. Yi Zhou was invited to give a tutorial on event-based visual odometry at the 3rd Event-based Vision Workshop at CVPR 2021 (Saturday, June 19, 2021).

The talk covers the following aspects:

* A brief literature review on the development of event-based methods;

* A discussion of the core problem of event-based VO from a methodological perspective;

* An introduction to our ESVO system and some updates on recent successes in driving scenarios.

Workshop Webpage: https://tub-rip.github.io/eventvision2021/

Video: https://www.youtube.com/watch?v=U0ghh-7kQy8&ab_channel=RPGWorkshops

Slides: https://tub-rip.github.io/eventvision2021/slides/CVPRW21_Yi_Zhou_Tutorial.pdf

Event-based stereo visual odometry

Check out our new work: "Event-based Stereo Visual Odometry", where we dive into the rather unexplored topic of stereo SLAM with event cameras and propose a real-time solution.

Authors: Yi Zhou, Guillermo Gallego and Shaojie Shen

Code: https://github.com/HKUST-Aerial-Robotics/ESVO

Project webpage: https://sites.google.com/view/esvo-project-page/home

Quadrotor fast flight in complex unknown environments

We present RAPTOR, a Robust And Perception-aware TrajectOry Replanning framework that enables fast and safe flight in complex unknown environments. Its main features are:

(a) finding feasible and high-quality trajectories in very limited computation time, and

(b) introducing a perception-aware strategy to actively observe and avoid unknown obstacles.

Specifically, a path-guided optimization (PGO) approach that incorporates multiple topological paths is devised to search the solution space efficiently and thoroughly. Trajectories are further refined to have higher visibility and sufficient reaction distance to unknown dangerous regions, while the yaw angle is planned to actively explore the surrounding space relevant for safe navigation.

Authors: Boyu Zhou, Jie Pan, Fei Gao and Shaojie Shen

Code for autonomous drone race is now available on GitHub

We released Teach-Repeat-Replan, a complete and robust system that enables autonomous drone racing.

Teach-Repeat-Replan can be applied in situations where the user has a preferred rough route but cannot pilot the drone ideally, such as drone racing. With our system, the human pilot virtually controls the drone with naive operations; our system then automatically generates a highly efficient repeating trajectory and executes it autonomously. During flight, unexpected collisions are avoided by onboard sensing and replanning. Teach-Repeat-Replan can also be used for general autonomous navigation, in which a drone flies autonomously through complex environments using only onboard sensing and planning.

Major components are:

- Planning: flight corridor generation, global spatial-temporal planning, local online re-planning

- Perception: global deformable surfel mapping, local online ESDF mapping

- Localization: global pose graph optimization, local visual-inertial fusion

- Control: geometric controller on SE(3)
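An ESDF (Euclidean signed distance field) stores, for every cell, the distance to the nearest obstacle, which a planner can query for clearance. The sketch below builds a 2D signed field on a boolean occupancy grid using the exact separable distance transform of Felzenszwalb and Huttenlocher, taking distance to obstacles minus distance to free space. It is an illustration in grid units under these assumptions, not the Teach-Repeat-Replan mapping code, which works in 3D and updates the map online; the function names are hypothetical.

```python
import math

INF = float("inf")


def dt_1d(f):
    """Exact 1D squared distance transform: d[q] = min_p ((q - p)^2 + f[p]).

    Builds the lower envelope of parabolas rooted at the finite samples of f.
    """
    n = len(f)
    sites = [q for q in range(n) if f[q] != INF]
    if not sites:
        return [INF] * n
    v = [sites[0]]   # vertices of the parabolas in the lower envelope
    z = [-INF, INF]  # parabola v[i] is lowest on the interval [z[i], z[i + 1]]
    for q in sites[1:]:
        while True:
            p = v[-1]
            # Intersection of the parabolas rooted at p and q.
            s = ((f[q] + q * q) - (f[p] + p * p)) / (2 * q - 2 * p)
            if s <= z[-2]:
                v.pop()  # parabola p is fully hidden; drop it
                z.pop()
            else:
                break
        v.append(q)
        z[-1] = s
        z.append(INF)
    d, k = [0.0] * n, 0
    for q in range(n):
        while z[k + 1] < q:
            k += 1
        d[q] = (q - v[k]) ** 2 + f[v[k]]
    return d


def edt_2d(mask):
    """Euclidean distance (in cells) from every cell to the nearest True cell."""
    rows, cols = len(mask), len(mask[0])
    g = [[0.0 if mask[r][c] else INF for c in range(cols)] for r in range(rows)]
    for c in range(cols):  # pass 1: along each column
        col = dt_1d([g[r][c] for r in range(rows)])
        for r in range(rows):
            g[r][c] = col[r]
    g = [dt_1d(row) for row in g]  # pass 2: along each row
    return [[math.sqrt(x) for x in row] for row in g]


def esdf_2d(occupied):
    """Signed field: positive in free space, negative inside obstacles."""
    to_obstacle = edt_2d(occupied)
    to_free = edt_2d([[not cell for cell in row] for row in occupied])
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(to_obstacle, to_free)]
```

Because the transform is separable, each pass is linear in the number of cells, which is what makes distance fields practical to recompute over a local window around the drone.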

Authors: Fei Gao, Boyu Zhou, and Shaojie Shen

Videos: Video1, Video2

Code: https://github.com/HKUST-Aerial-Robotics/Teach-Repeat-Replan

Code for VINS-Fusion is now available on GitHub

VINS-Fusion is an optimization-based multi-sensor state estimator, which achieves accurate self-localization for autonomous applications (drones, cars, and AR/VR). VINS-Fusion is an extension of VINS-Mono, which supports multiple visual-inertial sensor types (mono camera + IMU, stereo cameras + IMU, even stereo cameras only). We also show a toy example of fusing VINS with GPS. Features:

- multiple sensors support (stereo cameras / mono camera+IMU / stereo cameras+IMU)

- online spatial calibration (transformation between camera and IMU)

- online temporal calibration (time offset between camera and IMU)

- visual loop closure
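Online temporal calibration can be pictured as shifting each feature observation along its image-plane velocity by the unknown camera-IMU time offset td. The sketch below isolates that single scalar, assuming the feature velocities and the IMU-predicted feature positions are already given. Minimizing sum ||z_i + td * V_i - p_i||^2 then has the closed form td = sum(V_i . (p_i - z_i)) / sum(||V_i||^2). VINS-Fusion estimates the offset jointly with all other states inside its nonlinear optimization; `compensate` and `estimate_time_offset` are hypothetical names.

```python
def compensate(uv, velocity, td):
    """Shift an observed feature by its image-plane velocity times td (seconds)."""
    return (uv[0] + td * velocity[0], uv[1] + td * velocity[1])


def estimate_time_offset(observed, velocities, predicted):
    """Closed-form least-squares td minimizing sum ||z + td*V - p||^2.

    observed:   feature positions z_i as measured in the image
    velocities: image-plane feature velocities V_i (units per second)
    predicted:  positions p_i predicted from IMU-propagated poses (assumed given)
    """
    num = 0.0
    den = 0.0
    for (u, v), (vu, vv), (pu, pv) in zip(observed, velocities, predicted):
        num += vu * (pu - u) + vv * (pv - v)
        den += vu * vu + vv * vv
    if den == 0.0:
        raise ValueError("td is unobservable when all feature velocities are zero")
    return num / den
```

The zero-velocity check reflects a general point: the offset only becomes observable when features move across the image, so slow or static motion leaves it poorly constrained.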

As of 12 Jan. 2019, VINS-Fusion was the top open-source stereo algorithm on the KITTI Odometry Benchmark.

Authors: Tong Qin, Shaozu Cao, Jie Pan, Peiliang Li and Shaojie Shen